Creating Algorithmic Tools to Interpret and Communicate Open Data Efficiently

Introducing Our Developers

As a developer at rOpenGov, what type of data do you usually use in your work?

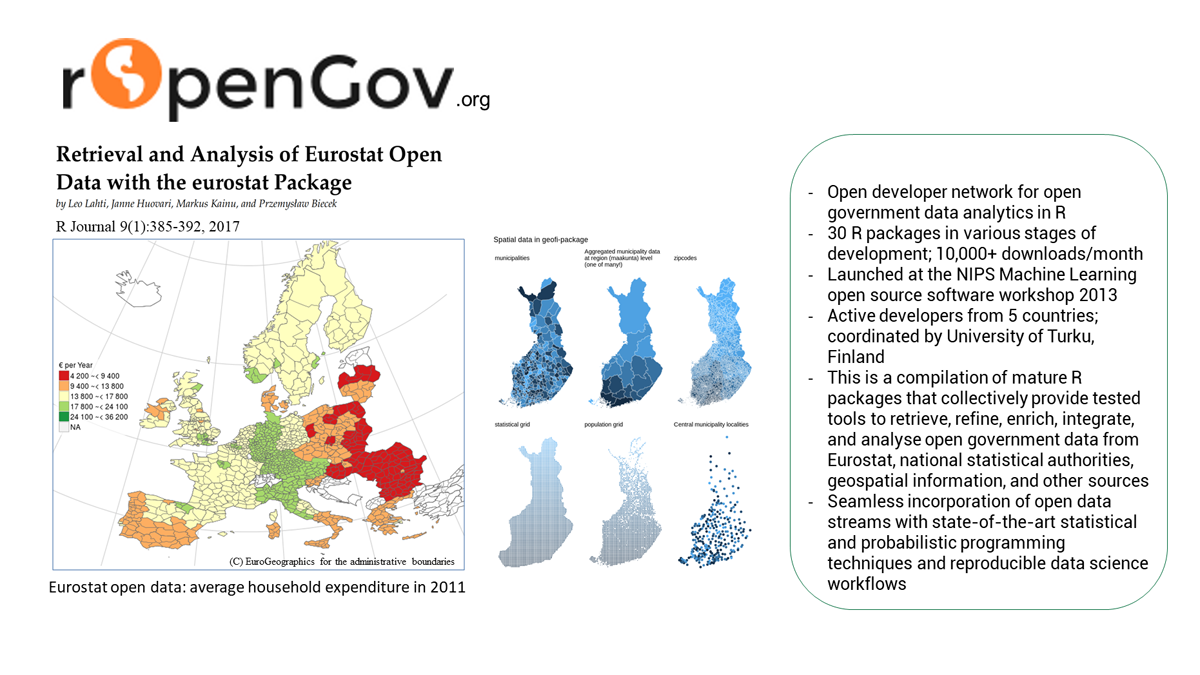

As an academic data scientist whose research focuses on the development of general-purpose algorithmic methods, I work with a range of applications from life sciences to humanities. Population studies play a big role in our research, and the information that we can draw from public sources (geospatial, demographic, environmental) often provides invaluable support. We typically use open data in combination with sensitive research data, but some research questions can be readily addressed using only open data from statistical authorities such as Statistics Finland or Eurostat.

In your ideal data world, what would be the ultimate dataset, or datasets, that you would like to see in the Music Data Observatory?

One line of our research analyses the historical trends and spread of knowledge production, in particular book printing, based on large-scale metadata collections. It would be interesting to extend this research to music, to understand the contemporary trends as well as the broader historical developments. Access to a large, systematic collection of music and composition data from different countries across long periods of time would make this possible.

Why did you decide to join the challenge and why do you think that this would be a game changer for researchers and policymakers?

Joining the challenge was a natural development given our overall activities in this area; the rOpenGov project has been around for a decade now, since the early days of the broader open data movement. It has also created an active international developer network, and we felt well equipped to take up the challenge. The game changer for researchers is that the project highlights the importance of data quality, even when dealing with official statistics, and provides new methods to solve data quality issues efficiently through the open collaboration model. For policymakers, it provides access to new high-quality curated data and case studies that can support evidence-based decision-making.

Do you have a favorite, or most used open governmental or open science data source? What do you think about it? Could it be improved?

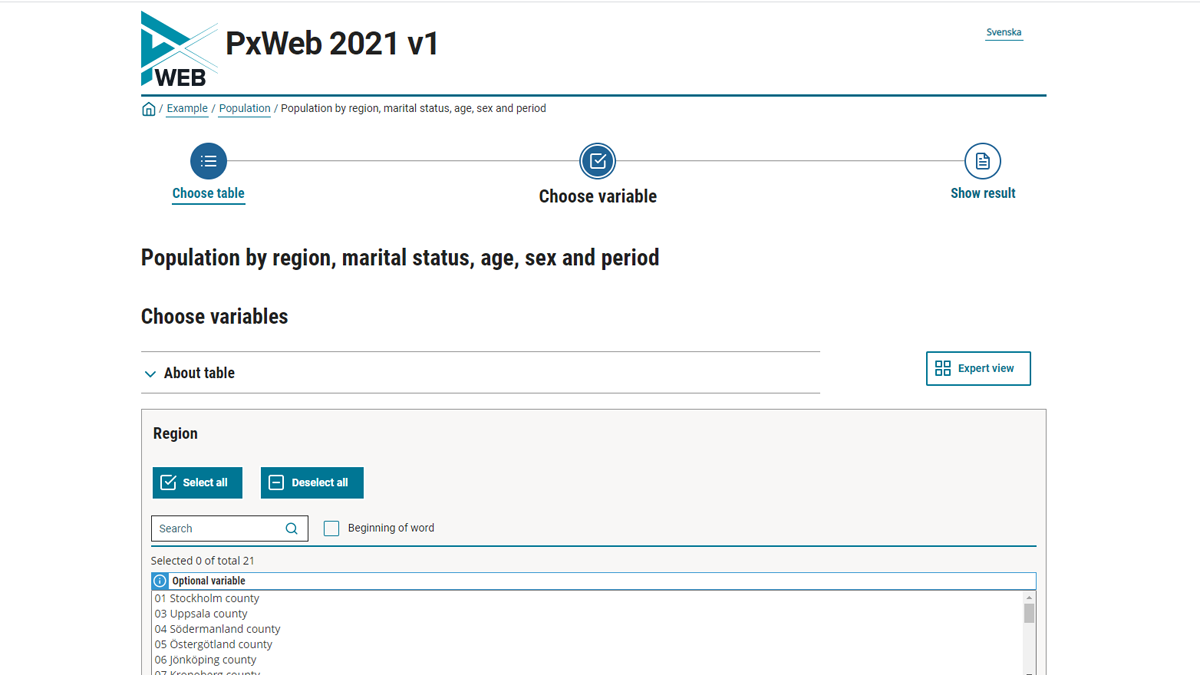

Regarding open government data, one of my favorites is not a single data source but a data representation standard. The px format is widely used by statistical authorities in various countries, and this has allowed us to create R tools that retrieve and analyze official statistics from many countries across Europe, spanning dozens of statistical institutions. Standardization of open data formats allows us to build robust algorithmic tools for downstream data analysis and visualization. Open government data is still too often shared in obscure, non-standard or closed-source file formats, and this creates significant bottlenecks for the development of scalable and interoperable AI and machine learning methods that can harness the full potential of open data.

From your perspective, what do you see being the greatest problem with open data in 2021?

Although there are a variety of open data sources available (and the numbers continue to increase), the availability of open algorithmic tools to interpret and communicate open data efficiently is lagging behind. One of the greatest challenges for open data in 2021 is to demonstrate how we can maximize the potential of open data by designing smart tools for open data analytics.

What can our automated data observatories do to make open data more credible in the European economic policy community and be accepted as verified information?

The professional network backing the project, together with the possibility of critical feedback from academic communities and later adoption by them, will support these efforts. Transparency of the data harmonization operations is the key to credibility, and it will be further supported by concrete benchmarks that highlight how conclusions drawn from the original sources can differ critically from those drawn from the harmonized, high-quality datasets.

How can we ensure the long-term sustainability of the efforts?

The open data space is so extensive that no single individual or institution can address all the emerging needs in this area. Open developer networks play a huge role in the development of algorithmic methods, and strong communities have developed around specific open data analytical environments such as R, Python, and Julia. These communities support networked collaboration and provide services such as software peer review. Long-term sustainability will depend on the support that such developer communities can receive, both from individual contributors and from institutions and governments.

Join us

Join our open collaboration Green Deal Data Observatory team as a data curator, developer or business developer. More interested in antitrust, innovation policy or economic impact analysis? Try our Economy Data Observatory team! Or does your interest lie more in data governance, trustworthy AI and other digital market problems? Check out our Digital Music Observatory team!